Some Official Robot Ethics Were Released in Britain

Let’s not create racist murder bots.

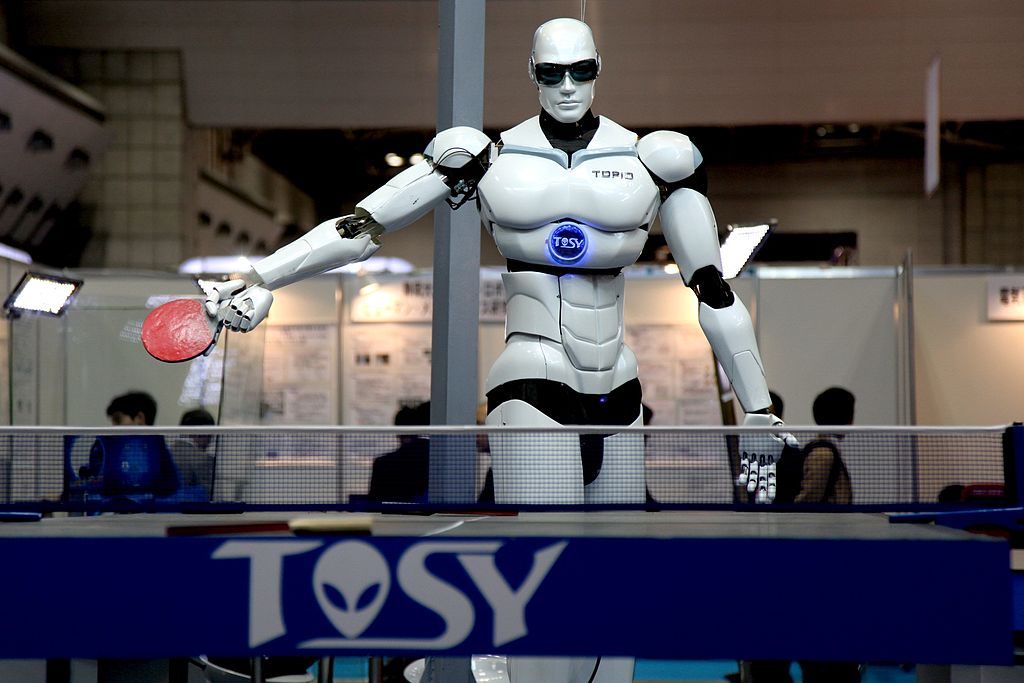

Does it hate you? (Photo: Humanrobo/CC BY-SA 3.0)

In another step towards the science fiction future many of us have been looking forward to, a British standards body has released what might be the first formal set of guidelines on ethical robot creation. As the Guardian reports, the British Standards Institution (BSI) released its paper on best practices for robot design, addressing everything from physical safety to racism.

Since the mid-20th century, Isaac Asimov’s Three Laws of Robotics have stood as the theoretical moral baseline for a future where robots have much the same agency as humans. But this new paper gets a bit more detailed. Asimov’s fictional laws state that (1) a robot cannot harm a human being; (2) it must obey the commands of human beings, unless doing so would break the first law; and (3) it must protect itself, unless doing so would break the first two. When Asimov came up with these laws for his science fiction stories, such a future seemed a million miles away, but today it seems right around the corner, and his edicts a bit simple.

BSI sets national engineering standards in the UK, and it decided to take a serious look at our robotic future and get the jump on making sure it isn’t a nightmare. Working with a panel of scientists, ethicists, philosophers, and more, it developed BS 8611:2016, a document that will hopefully guide roboticists in the right direction.

Among the issues the paper addresses are robots that could cause physical harm. As quoted in the Guardian, “Robots should not be designed solely or primarily to kill or harm humans; humans, not robots, are the responsible agents.” It also looks at over-dependence on robots and the dangers of creating emotional connections with them, especially as more caregiving robots are introduced. It also warns that “deep learning” robots, which use the entirety of the internet as a knowledge base, could develop their own commands and alter their own programming in response to their calculations, producing strange or unforeseen actions.

Maybe the most immediate and troubling point the standard explores is the robotic tendency towards dehumanization and even accidental bigotry. As facial recognition technology becomes more standard, there are already issues with its ability to identify people of different races, and such systems could easily be programmed, either willingly or unconsciously, to have a bias. As robotics are more seamlessly integrated into areas like medicine and policing, robotic thinking (literally) can create major issues.

For now, much of BS 8611:2016 concerns a future that is still around the corner, but as Asimov realized, it’s never too early to start planning to avoid killer robots.
